In the rapidly evolving field of Generative AI (GenAI), organizations face a critical challenge: how can they leverage state-of-the-art AI capabilities while maintaining flexibility and avoiding vendor lock-in?

In this blog post, we make the case for adopting cloud-native, open source technologies in GenAI deployments as a path to independence from both cloud providers and large language model (LLM) providers.

First, it is important to distinguish between two main types of service providers in the GenAI ecosystem:

- LLM providers, such as OpenAI, Anthropic, and Google, which develop large language models and offer access to them through APIs, as well as through their own chat interfaces under (often expensive) enterprise agreements.

- Cloud infrastructure providers, such as Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP), which offer the underlying computing resources and services, including hosted LLMs.

Vendor dependence in the context of GenAI

While both types of providers offer valuable services, relying too heavily on either can lead to significant limitations and risks for businesses, particularly in the context of vendor dependence:

- Financial Considerations

Enterprise agreements with LLM providers, like OpenAI or Anthropic, can become exceptionally costly as usage scales. These agreements often allow employees to use LLM services privately, but the per-user costs can add up quickly for large organizations. Similarly, long-term commitments to specific cloud infrastructure providers may not always align with evolving business needs.

- Limited Flexibility

Exclusive reliance on a single LLM provider restricts an organization's ability to leverage diverse models or adapt to emerging technologies. This limitation applies not only to API usage, but also to the models available for private use by employees. Likewise, deep integration with a specific cloud provider's proprietary services can hinder portability.

- Technological Constraints

As the field of GenAI advances rapidly, being tied to a single provider's technology stack may prevent organizations from adopting more efficient or capable solutions as they emerge. This is particularly relevant for LLM providers, where new models and capabilities are released constantly.

- Data Control and Privacy

Utilizing third-party APIs or cloud-hosted solutions for LLMs may raise concerns about data privacy and control, particularly for sensitive or proprietary information. This is especially critical when employees are using these services for internal communications and projects.

The cloud-native paradigm: A path to GenAI independence

To address these challenges, organizations are increasingly turning to cloud-native architectures built on open source technologies. This approach offers a compelling solution for deploying GenAI applications with greater flexibility, scalability, and independence.

What is cloud-native?

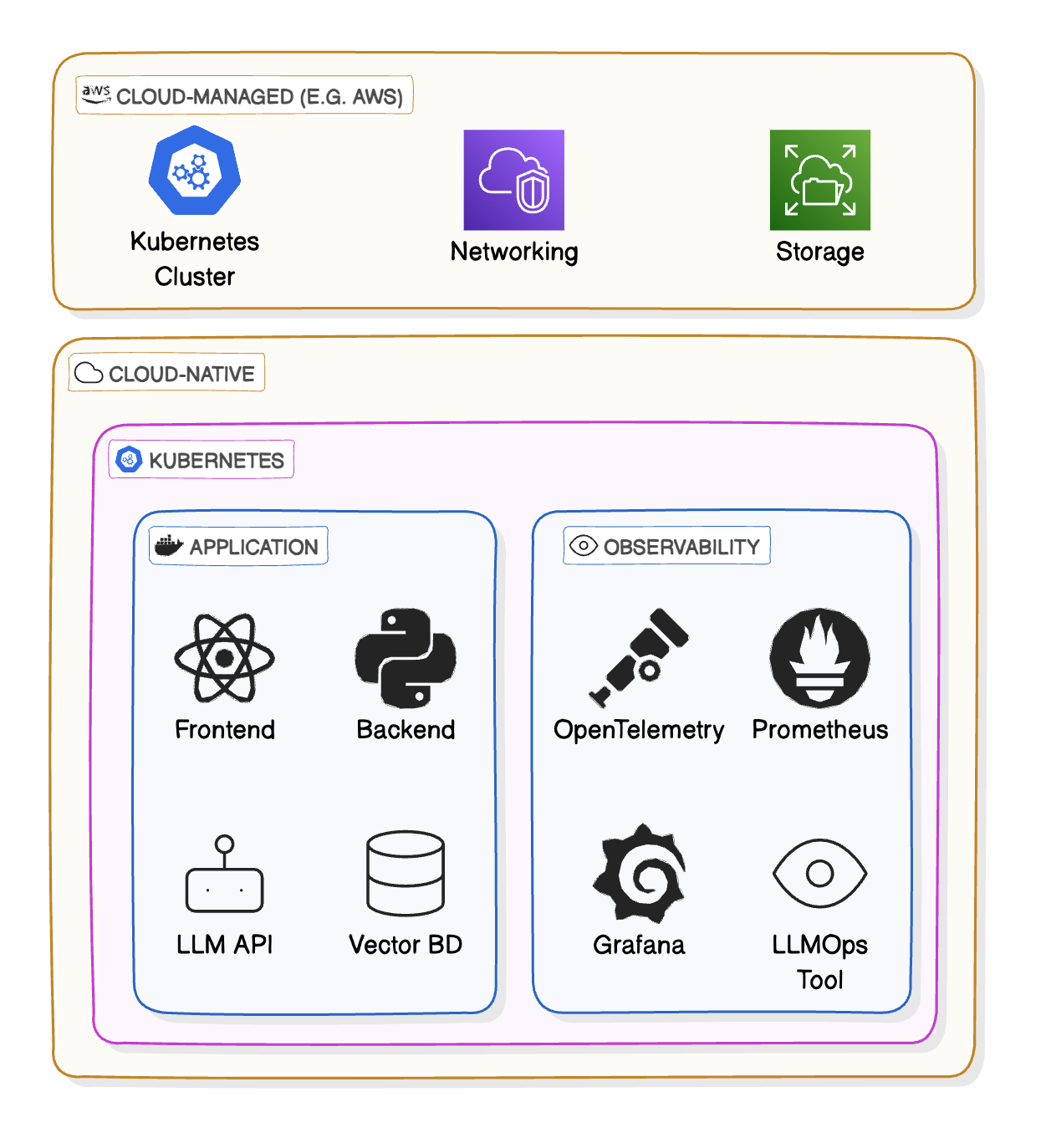

Cloud-native refers to a set of open source technologies and methodologies designed for building and running applications that fully leverage the advantages of cloud computing. At its core is Kubernetes, an industry-standard container orchestration platform that manages containerized workloads and services across clusters of machines.

Surrounding Kubernetes are various complementary projects organized into categories such as container runtimes, networking, service mesh, monitoring, logging, and continuous integration/continuous deployment (CI/CD). Together, these technologies form a cohesive ecosystem that enables organizations to build, deploy, and manage resilient, scalable, and agile cloud-agnostic applications.

Advantages of cloud-native, open source GenAI applications

- Vendor Independence

Open source cloud-native technologies reduce lock-in to any single cloud infrastructure or LLM provider, allowing organizations to switch between or combine services as needed.
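In application code, vendor independence mostly comes down to not letting a vendor SDK leak into your business logic. The sketch below assumes a hypothetical `ChatBackend` protocol and a dummy `EchoBackend`; in practice each backend would wrap a real client (a provider SDK, or an HTTP call to a self-hosted model). None of these names come from an existing library.

```python
from dataclasses import dataclass
from typing import Protocol


class ChatBackend(Protocol):
    """Hypothetical interface: anything that turns a prompt into a completion."""

    def complete(self, prompt: str) -> str: ...


@dataclass
class EchoBackend:
    """Hypothetical stand-in for a real client (hosted API or self-hosted model)."""

    name: str

    def complete(self, prompt: str) -> str:
        # A real backend would call the provider's SDK or HTTP API here.
        return f"[{self.name}] {prompt}"


class Assistant:
    """Application code depends only on the ChatBackend protocol, not on a vendor SDK."""

    def __init__(self, backend: ChatBackend) -> None:
        self.backend = backend

    def answer(self, question: str) -> str:
        return self.backend.complete(question.strip())


# Switching providers is a one-line change where the Assistant is constructed.
hosted = Assistant(EchoBackend("hosted-api"))
self_hosted = Assistant(EchoBackend("self-hosted"))
print(hosted.answer("  What is Kubernetes? "))
```

Because the rest of the application only ever calls `answer`, combining providers (for example, routing some requests to a self-hosted model) does not require touching business logic.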

- Scalability

GenAI applications often experience variable demand. Cloud-native architectures excel at dynamically scaling resources up or down to meet these fluctuations efficiently.
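In Kubernetes, this dynamic scaling is typically handled by the Horizontal Pod Autoscaler, whose core rule is documented as desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue). The function below is a simplified re-implementation for intuition only, assuming the default 10% tolerance and a min/max replica clamp; the real controller also applies stabilization windows and handles unready pods, which this sketch ignores.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
    tolerance: float = 0.1,
) -> int:
    """Simplified HPA rule: scale proportionally to how far the metric is from target."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to target: no change
    else:
        desired = math.ceil(current_replicas * ratio)
    # Never go below the minimum or above the maximum replica count.
    return max(min_replicas, min(max_replicas, desired))


# e.g. 3 inference pods averaging 9 concurrent requests each, against a target of 6
print(desired_replicas(3, current_metric=9, target_metric=6))  # 5
```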

- Resilience

Built with fault tolerance in mind, cloud-native deployments help minimize downtime and keep GenAI services highly available.
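Platform-level fault tolerance (restarting failed pods, spreading replicas across nodes) is handled by Kubernetes itself. At the application level, a complementary pattern is to fall back to another model backend when one fails. A minimal sketch, assuming each backend is simply a callable that takes a prompt and returns text; the backends here are hypothetical stand-ins, and a production version would add backoff between retries and catch narrower exception types.

```python
from typing import Callable, Sequence


class AllBackendsFailed(RuntimeError):
    """Raised when every backend (and every retry) has failed."""


def complete_with_fallback(
    prompt: str,
    backends: Sequence[tuple[str, Callable[[str], str]]],
    retries_per_backend: int = 2,
) -> tuple[str, str]:
    """Try each backend in order, retrying failures, and return (backend_name, completion)."""
    errors: list[str] = []
    for name, call in backends:
        for attempt in range(1, retries_per_backend + 1):
            try:
                return name, call(prompt)
            except Exception as exc:  # a real client would catch narrower errors
                errors.append(f"{name} attempt {attempt}: {exc}")
    raise AllBackendsFailed("; ".join(errors))


def flaky(prompt: str) -> str:
    # Hypothetical primary backend that is currently timing out.
    raise TimeoutError("upstream timed out")


def local_model(prompt: str) -> str:
    # Hypothetical self-hosted fallback.
    return f"local answer to: {prompt}"


print(complete_with_fallback("hi", [("primary", flaky), ("fallback", local_model)]))
# ('fallback', 'local answer to: hi')
```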

- Hybrid and Multi-Cloud Flexibility

Cloud-native technologies enable consistent deployment across multiple cloud providers or in hybrid on-premises/cloud setups.

- Cost Optimization

While pay-as-you-go models can be cost-effective at low volumes, cloud-native deployments often turn out to be cheaper at scale, especially for compute-intensive GenAI workloads or high-traffic, customer-facing products. The same holds for internal products at large enterprises, where per-user subscription fees add up quickly.
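Whether self-hosting is cheaper is ultimately a break-even calculation: per-seat (or usage-based) pricing grows linearly, while a self-hosted deployment is dominated by fixed infrastructure and operations costs. The sketch below uses per-user pricing and purely illustrative numbers, not real price quotes; plug in your own figures.

```python
import math


def monthly_cost_per_seat(users: int, fee_per_user: float) -> float:
    """Subscription model: cost grows linearly with headcount."""
    return users * fee_per_user


def monthly_cost_self_hosted(users: int, fixed: float, per_user_variable: float) -> float:
    """Self-hosted model: mostly fixed infrastructure/ops cost plus a small per-user share."""
    return fixed + users * per_user_variable


def break_even_users(fee_per_user: float, fixed: float, per_user_variable: float) -> int:
    """Smallest headcount at which self-hosting costs no more than per-seat pricing."""
    if fee_per_user <= per_user_variable:
        raise ValueError("self-hosting never breaks even with these inputs")
    return math.ceil(fixed / (fee_per_user - per_user_variable))


# Purely illustrative inputs (assumptions, not quotes):
FEE = 30.0        # subscription fee per user per month
FIXED = 12_000.0  # GPU nodes, storage, and operations per month
VARIABLE = 2.0    # extra compute per active user per month

n = break_even_users(FEE, FIXED, VARIABLE)
print(n)  # 429
```

With these made-up inputs, self-hosting breaks even at 429 users; below that, the subscription is cheaper.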

- Open Source Innovation

By leveraging open source technologies, organizations can benefit from rapid innovation and community-driven improvements in the GenAI space. While proprietary LLM providers like OpenAI and Anthropic often top the charts, the open source ecosystem of tools around these models is large and often offers the best of both worlds, e.g. combining Anthropic’s ‘Artifacts’ feature with OpenAI’s new o1-preview model.

Key components of cloud-native GenAI deployments

Containerization and Orchestration

Containerization involves packaging applications and their dependencies into isolated environments, ensuring consistency across different deployment environments. Kubernetes, as a container orchestration platform, automates the deployment, scaling, and management of these containerized applications.
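To make this concrete, here is a minimal Kubernetes `Deployment` manifest for a containerized model server, built as a Python dict and emitted as JSON (which `kubectl apply -f` accepts alongside YAML). The public `ollama/ollama` image, its default port 11434, and the resource figures are illustrative assumptions; in practice, pin an image tag and size resources for your own model.

```python
import json


def llm_deployment(name: str, image: str, port: int, replicas: int = 2) -> dict:
    """Build a minimal apps/v1 Deployment manifest for a model-serving container."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector must match the pod template's labels.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "ports": [{"containerPort": port}],
                            # Requests/limits let the scheduler place pods sensibly.
                            # GPU workloads would also request e.g. "nvidia.com/gpu",
                            # which requires the NVIDIA device plugin on the cluster.
                            "resources": {
                                "requests": {"cpu": "2", "memory": "8Gi"},
                                "limits": {"memory": "8Gi"},
                            },
                        }
                    ]
                },
            },
        },
    }


manifest = llm_deployment("ollama", "ollama/ollama", 11434)
# Usage: python this_script.py | kubectl apply -f -
print(json.dumps(manifest, indent=2))
```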

Observability

In the complex landscape of GenAI applications, visibility into system performance is crucial. Cloud-native observability tools provide:

- Logging: Capture and analyze events and errors across the GenAI pipeline

- Metrics: Monitor quantitative data such as inference latency, model accuracy, and resource utilization

- Tracing: Understand request flows across microservices in the GenAI architecture
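A minimal sketch of the logging and metrics ideas above, using only the Python standard library: a hypothetical `observed` decorator emits one structured JSON log line per call and records its latency, from which a p95 can be computed. In a real deployment you would export these signals to an observability backend rather than keep them in a module-level list; `fake_inference` stands in for an actual model call.

```python
import json
import logging
import statistics
import time
from functools import wraps

logging.basicConfig(level=logging.INFO, format="%(message)s")
log = logging.getLogger("genai")

LATENCIES_MS: list[float] = []  # in production, a metrics backend would hold this


def observed(step: str):
    """Decorator: log one structured JSON event per call and record its latency."""
    def decorator(fn):
        @wraps(fn)
        def wrapper(*args, **kwargs):
            start = time.perf_counter()
            status = "ok"
            try:
                return fn(*args, **kwargs)
            except Exception:
                status = "error"
                raise
            finally:
                elapsed_ms = (time.perf_counter() - start) * 1000
                LATENCIES_MS.append(elapsed_ms)
                log.info(json.dumps({"step": step, "status": status,
                                     "latency_ms": round(elapsed_ms, 2)}))
        return wrapper
    return decorator


@observed("inference")
def fake_inference(prompt: str) -> str:
    # Hypothetical stand-in for a model call.
    time.sleep(0.01)
    return prompt.upper()


for p in ["a", "b", "c", "d", "e"]:
    fake_inference(p)

# 95th percentile: the last of the 19 cut points that split the data into 20 groups.
p95 = statistics.quantiles(LATENCIES_MS, n=20)[-1]
print(f"p95 inference latency: {p95:.1f} ms")
```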

OpenTelemetry is an open standard for how observability data (logs, metrics, and traces) is generated, collected, and exported. When you instrument your application with it, you are free to send that data to whichever observability platform you want to use:

- Cloud-managed platforms from providers such as AWS and Azure

- SaaS solutions like Datadog

- Self-hosted, cloud-native solutions like the Grafana stack

The open source world also offers an extensive ecosystem of so-called LLMOps tools that give visibility into the functional performance of your GenAI application, such as Langfuse, LangWatch, and Phoenix. Consider setting up such a tool from the start of your project to enable robust evaluation of your use case and metrics-driven development.
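Dedicated LLMOps tools go much further, but the core of metrics-driven development is simple: a fixed test set and a score you rerun after every prompt or model change. The sketch below is not the API of any of the tools mentioned; `EvalCase`, the keyword-based scoring, and `toy_app` are hypothetical, and keyword matching is only a crude proxy for answer quality.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class EvalCase:
    question: str
    must_contain: list[str]  # keywords a correct answer should mention


def keyword_score(answer: str, case: EvalCase) -> float:
    """Fraction of expected keywords found in the answer (case-insensitive)."""
    text = answer.lower()
    hits = sum(1 for kw in case.must_contain if kw.lower() in text)
    return hits / len(case.must_contain)


def evaluate(app: Callable[[str], str], cases: list[EvalCase]) -> float:
    """Mean keyword score over a fixed test set."""
    return sum(keyword_score(app(c.question), c) for c in cases) / len(cases)


def toy_app(question: str) -> str:
    # Hypothetical stand-in for your GenAI application.
    canned = {
        "What orchestrates our containers?": "Kubernetes schedules them.",
        "What does RAG stand for?": "Retrieval-Augmented Generation.",
    }
    return canned.get(question, "I don't know.")


CASES = [
    EvalCase("What orchestrates our containers?", ["kubernetes"]),
    EvalCase("What does RAG stand for?", ["retrieval", "generation"]),
    EvalCase("Which standard do we use for telemetry?", ["opentelemetry"]),
]

score = evaluate(toy_app, CASES)
print(f"{score:.3f}")  # 0.667
```

Here `toy_app` answers two of the three questions, so the score is 0.667; tracking that number over time is what makes regressions visible.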

Vector Databases

Many GenAI applications, particularly those utilizing Retrieval-Augmented Generation (RAG), require efficient vector databases. Cloud-native deployments offer flexibility in choosing and scaling vector database solutions. Open source options such as ChromaDB, Weaviate, and Milvus can be easily deployed on Kubernetes.
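Conceptually, a vector database stores embeddings and returns the entries closest to a query embedding. The toy index below does this by brute-force cosine similarity over hand-written 3-dimensional vectors. It is not the API of ChromaDB, Weaviate, or Milvus, and real embeddings come from an embedding model with hundreds or thousands of dimensions.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class ToyVectorIndex:
    """Brute-force in-memory index: scores every stored vector on each query."""

    def __init__(self) -> None:
        self._items: list[tuple[str, list[float]]] = []

    def add(self, doc_id: str, embedding: list[float]) -> None:
        self._items.append((doc_id, embedding))

    def query(self, embedding: list[float], k: int = 2) -> list[tuple[str, float]]:
        scored = [(doc_id, cosine(embedding, vec)) for doc_id, vec in self._items]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:k]


index = ToyVectorIndex()
index.add("k8s-guide", [0.9, 0.1, 0.0])
index.add("hr-policy", [0.0, 0.2, 0.9])
index.add("helm-intro", [0.8, 0.3, 0.1])

print([doc for doc, _ in index.query([1.0, 0.2, 0.0], k=2)])
# ['k8s-guide', 'helm-intro']
```

Production vector databases replace this linear scan with approximate nearest-neighbour indexes such as HNSW, which is what lets them scale to millions of documents.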

Practical implementation: Open-source alternatives to proprietary solutions

To illustrate the potential of open source, consider two open source projects that exemplify this approach:

- Open WebUI: An extensible, self-hosted UI with features like RAG, function calling, and tool use. It makes it very easy to set up your own chat interface on top of Ollama, a tool for running open LLMs locally.

- LibreChat: Another ChatGPT-like UI with a lot of features, including easy model selection and RAG.
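Open WebUI talks to Ollama over Ollama's HTTP API, and internal tools can use the same API directly. A minimal standard-library sketch, assuming Ollama is running on its default port 11434 and that a model (here `llama3.2`, purely as an example) has already been pulled; the request is built without being sent, so the snippet runs without a server.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/chat"  # assumes Ollama's default local port


def build_chat_request(model: str, user_message: str) -> urllib.request.Request:
    """Build (but don't send) a non-streaming request to Ollama's /api/chat endpoint."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
        "stream": False,  # ask for a single JSON response instead of a stream
    }
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(model: str, user_message: str) -> str:
    """Send the request; requires a running Ollama server with `model` pulled."""
    with urllib.request.urlopen(build_chat_request(model, user_message), timeout=120) as resp:
        body = json.load(resp)
    return body["message"]["content"]


req = build_chat_request("llama3.2", "Summarize our VPN policy.")
print(req.get_method(), req.full_url)
# With a local server running: print(chat("llama3.2", "Hello!"))
```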

These projects showcase how organizations can create secure, privately-hosted GenAI solutions that can access internal data sources and adapt to specific business needs, all while maintaining independence from proprietary vendors.

Deploying these applications effectively within your organization can be challenging. This is where expert guidance can make a significant difference.

Xomnia specializes in helping organizations set up their own internal and private ChatGPT-like systems, tailored to specific business needs and integrated with private data.

Future-proofing GenAI investments with cloud-native architectures

In the rapidly evolving landscape of Generative AI, we recommend maintaining flexibility and independence. Cloud-native deployments, built on open source technologies and container orchestration platforms like Kubernetes, offer a strong foundation for organizations looking to leverage GenAI while avoiding the pitfalls of vendor lock-in and high per-user monthly subscription fees.

This approach not only future-proofs GenAI investments, but also provides the necessary tools to manage costs, ensure security, and scale effectively as use cases grow. Whether deploying API-based services or self-hosting large language models, cloud-native architectures provide the foundation for success in the dynamic world of generative AI.