With the ongoing adoption of Generative AI in our professional and personal lives, English remains the main language used in chatbot conversations. This is because Large Language Models (LLMs) derive their capabilities from training on data published on the internet, which, in turn, is mostly written in English.

For instance, the pre-training dataset of GPT-3 (2020) contains only about 7% non-English tokens, while Meta AI’s Llama 3 (2024) has around 8%. This minimal increment highlights the persistent imbalance in LLM’s training data. Due to this training data imbalance, LLMs exhibit a performance degradation when used in foreign languages. The magnitude of this performance degradation is hard to estimate, as multilingual evaluation is frequently performed with machine-translated English datasets. This pinpoints a larger problem present in the current AI ecosystem: the scarcity of high-quality, non-English training data and benchmarks to train and evaluate model capabilities.

This blog explores initiatives that try to accelerate multilingual LLMs, with a particular emphasis on the state of the Dutch LLM ecosystem. It also explores the status of LLM evaluation and training benchmarks for non-English languages. We conclude with recommendations for those interested in supporting efforts that aim to increase the representation of other languages within different large language models.

The case for multilingual (and Dutch) Large Language Models

At the beginning of 2024, the Dutch government published their government-wide vision on Generative Al for individuals, business, government, and society as a whole. In this document, they propose four principles on which they believe the development, use, and embedding of Generative AI should be based:

- Generative AI is developed and applied in a safe way

- Generative AI is developed and applied equitably

- Generative AI serves human welfare and safeguards human autonomy

- Generative AI contributes to sustainability and prosperity

One of the dimensions of principle II is opportunity equality. The Dutch government recognizes opportunity equality may be under pressure due to unequal access to Generative AI, due to factors such as income differences and digital skills gap. While unequal access due to language barriers is not explicitly mentioned, the lack of multilingual AI means that the full benefits of LLMs are only accessible to citizens sufficiently proficient in English.

Although GPT, LLama, and other frontier models are capable of generating coherent multilingual responses, it is hard to precisely assess how well these models understand Dutch and how they acquired language specific proficiency. And while OpenAI narrows the gap among languages with the release of GPT-4o, there still remain differences in performance between English and other languages.

These differences between languages not only result in opportunity inequality for non-English speakers, but also affect businesses looking to embed Generative AI in their services. This is because businesses may run into mixed results based on the language used in chat conversations. In other cases, businesses are constrained to non-English documents that function as the knowledge base for the LLMs.

GPT-NL: A transparent and fair Dutch LLM

Given these challenges, it is promising to see that the Dutch government has initiated the GPT-NL project and committed to funding it. GPT-NL is a collaboration between Dutch research institute TNO, the forensic institute NFI, and education and research organization SURF. This collaboration aims to develop a Dutch LLM that is based on the transformer architecture that powers ChatGPT. These parties aim to develop a model that approaches a 'gpt-3.5-turbo' level of performance but that is built for transparent, ethical, and verifiable use of AI. This model will also be aligned with Dutch and European values, standards, and data ownership principles. Moreover, it aims to decrease the reliance on big US tech players by offering a transparent alternative.

To achieve these goals, the GPT-NL model will not be a fine-tuned version of an existing model. Instead, it will be created from scratch by rigorously curating a dataset of about 500 billion tokens, and training a language model on this dataset. More precisely, GPT-NL will have two models, as its creators aim for a smaller model in the range of 7B parameters and a larger model in the range of 70B parameters (i). This setup of two models follows an approach also seen in frontier models such as Llama from Meta AI. In this multiple-model setup, a smaller model usually addresses local device use cases, whereas the heavier model addresses uses cases that require state-of-the-art intelligence, such as agentic AI (ii). The GPT-NL models will be trained on a 50/50 split of Dutch and English text, and following their release, the creators plan to host a fine-tuning cluster for interested parties to create fine-tuned variants of GPT-NL.

The GPT-NL initiative aims to be as transparent as possible, meaning that its dataset- and model-cards, training frameworks, and the code used to create the training dataset will all be published. The intention to release the training dataset is especially noteworthy. Often, commercial AI vendors refrain from doing this as the dataset is their 'secret sauce', and giving this away could see them lose their competitive edge. However, due to the nature of GPT-NL, this issue does not affect its creators. To conform to GDPR, intellectual property law, and the EU AI Act, the data curation preprocessing will detect and remove harmful text bias, and Personal Identifiable Information (PII). For PII detection and removal, named entity recognition models will be used to identify names, distinguish between public- and non-public names and pseudo-anonymize non-public names.

GPT-NL’s creators aim to finish dataset curation and start training the model in 2024 so that it can be released in 2025. Realistically, this means that when GPT-NL will be published, it will have been over 2 and a half years since gpt-3.5-turbo (the initial ChatGPT) was released. With the pace in which new models are currently being released, much more capable, English-focused models will likely be available by then. As such, companies in the position to use state-of-the-art models from commercial vendors might have to make a trade-off: A GPT-NL model will align better with Dutch and EU regulations and standards, whereas a commercial model will yield better results.

Commercial and open-source initiatives in multilingual LLM development

Whilst the Dutch government is developing GPT-NL, both commercial model vendors and the AI open-source community are also putting in an effort to accelerate the development of multilingual AI. While commercial model vendors aim to develop multilingual models by including a larger share of non-English text in the training data, open-source efforts often focus on fine-tuning open-weight (iii) models on specific languages.

An example of a commercial model vendor that focuses on the multilingual aspect of LLMs is Cohere. According to Cohere, their command-r-plus model performs well on 10 key business languages. In addition, their non-profit research lab recently launched Aya, a multilingual language model which follows instructions in 101 languages. To train their Aya model, they crowd-sourced a multilingual Instruction Fine Tuning (IFT) dataset, working with fluent speakers of languages from around the world to collect natural instances of instructions and completions. As a result, the Aya dataset is the largest human-annotated multilingual instruction fine tuning dataset to date, consisting of over 204K instances that cover 65 languages.

An example of an open-source effort to train a Dutch LLM is Geitje, which is based on the Mistral-7B model and developed by Edwin Rijgersberg. Geitje-7B was trained on the Dutch subset of two public datasets, Gigagorpus and MADLAD-400. Geitje-7B is a so-called foundation model, which means it has only completed the first phase of LLM development, called pre-training (iv). Next, Geitje-7B-chat and Geitje-7B-Ultra were created by fine tuning the model on chat conversations in the so-called post-training phase (v).

To assess the capability of this model in Dutch, one may want to evaluate the performance on a benchmark, which brings us to another challenge stemming from the lack of multilingual datasets in today’s AI ecosystem…

Evolving methods in LLM evaluation for non-English languages

The evaluation of large language models is still a nascent field, and automatic benchmark testing and reporting are currently the primary method of evaluation. However, the AI community has grown increasingly skeptical of LLM benchmark evaluations on datasets, such as MMLU, Humaneval, and others, irrespective of the language in question. This is largely due to the manipulation of benchmarks to falsely inflate progress and performance.

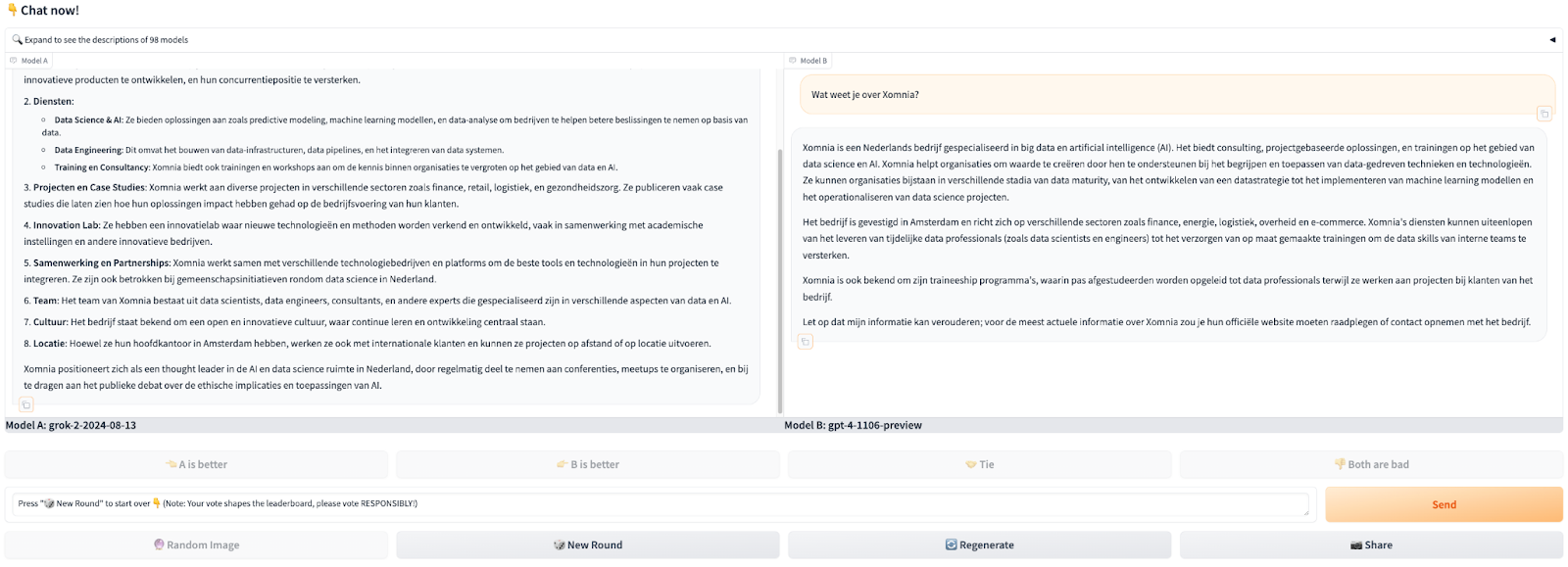

To address this issue, Chatbot Arena has emerged as an alternative evaluation method. It is an open-source, collective human evaluation leaderboard started as a research project. On this platform, users ask the same question to two unknown LLMs and then vote which response they prefer. At the time of writing this, a multilingual alternative to Chatbot Arena does not exist.

Regarding automatic benchmarks, the ScandEval initiative has emerged as a Scandinavian and Germanic alternative to English benchmarks. It provides a benchmarking package, and has created Scandinavian and Dutch versions of popular benchmark datasets through machine translation. This method comes with drawbacks, however, as it results in evaluating a model’s Dutch proficiency on text that is originally written in English. Examining the ScandEval Dutch NLG leaderboard, this may clarify why the original Mistral-7B model, which serves as the foundation for the Geitje-7B-Ultra, outperforms the Geitje-7B-Ultra despite the latter being fine tuned on Dutch.

How to contribute to the development of multilingual LLMs?

The lack of natively constructed multilingual benchmarks means it is hard for individuals and businesses to compare and evaluate a model's suitability for their case. Luckily, there are various initiatives underway that try to change this:

Aya Platform by Cohere for AI.

For the aforementioned Aya model, Cohere for AI leans heavily on the Aya platform for both model evaluation and dataset curation. On this platform, users can rate model performance, contribute to their native language, and review user feedback. In addition, they host a discord community with initiatives to accelerate multilingual AI.

Occiglot.eu

Occiglot, a research collective for open-source development of LLMs by and for Europe, strives for dedicated language modeling solutions to maintain Europe’s academic and economic competitiveness and AI sovereignty. For model evaluation, they seek collaborators with an intimate understanding of their local language and culture to set up better evaluation suites.

Data is Better Together (DIBT)

DIBT is a community effort and collaboration between HuggingFace and Argilla, an open-source ML community platform. One project of DIBT is the Multilingual Prompt Evaluation Project (MPEP), where a community of AI enthusiasts translate and create language-specific benchmarks for open LLMs. The Dutch translation project has finished, and the datasets have been uploaded to HuggingFace.

Concluding thoughts

Overall, various initiatives looking to increase the multilingual proficiency of LLMs have emerged, ranging from GPT-NL, to commercial vendors, to open-source initiatives. It's exciting to see multilingual and Dutch LLM development gaining traction with a community-focused approach.

In the short-term, more non-English datasets are needed to increase the non-English share in the data mix on which LLMs are trained. Secondly, natively constructed benchmarks should be developed, enabling more accurate evaluation of LLM performance in those languages. In the ever-changing Generative AI landscape, it will be interesting to see if the open-science community, governments, or commercial model vendors will find a way to make everyone benefit from the opportunities offered by generative AI.

For enterprises looking to incorporate non-English LLMs in their business, we recommend taking the following aspects into consideration:

- Evaluation. Since public multilingual evaluation benchmarks are mostly based on machine translation, we recommend developing a custom evaluation set catered to your use case, in addition to public benchmarks.

- Prompt engineering. Do not overlook the importance of prompt engineering, which involves crafting and refining the instructions given to an LLM. By including examples of the same question in various languages, the likelihood of the LLM responding in the user’s requested language can be increased. This approach is particularly helpful when deploying a single model in a multilingual use case.

- Considerations for model selection. Given the rapid pace of new LLM releases, model-specific recommendations can quickly become outdated. On a vendor level, OpenAI remains a strong option, and other notable players include Mistral, Meta AI, and Anthropic. According to Mistral's release blog, Meta AI's latest LLM currently performs best in Dutch, with Mistral's model following closely. Besides evaluating performance, we recommend taking other constraints, such as data privacy requirements, into consideration. For example, if your data must remain within the EU, ensure the model can be hosted in an EU cloud region by your cloud provider.

- Trustworthy AI. While OpenAI boasts state-of-the-art performance with their models, it's important to acknowledge the increasing number of copyright lawsuits filed against them and other model vendors. If your Generative AI use case must adhere to copyright and data residency considerations, we recommend, for instance, staying updated on GPT-NL’s developments. GPT-NL is committed to creating a reliable model aligned with the EU's Ethics Guidelines for Trustworthy AI, ensuring systems are lawful, ethical, and robust.

Xomnia is the leading data and AI consultancy in The Netherlands. If your business requires help with exploring or building a Generative AI use case, contact us!

Footnotes:

(i) The number of parameters in a model is a measure of the size and complexity of the LLM. Generally, the larger the parameters (in combination with more training data) the more complex and powerful the LLM.

(ii) Agentic AI: An AI agent is a software program designed to interact with its environment, gather data, and utilize that data to carry out tasks autonomously to achieve specific objectives. While humans define the goals, the AI agent independently determines the optimal actions necessary to accomplish them.

(iii) Open-weights model: A model whose architecture and weights (parameters) are freely available to download with minimal or no restrictions.

(iv) In pre-training, a model is merely trained on next token prediction. This results in a model that is not suitable for chat conversations, as it is simply configured to return the next token.

(v) In post-training, a model is first instruction-tuned with chat conversations to better follow instructions. Next, reinforcement learning is performed to encourage desired behavior with RLHF or Direct Preference Optimization (DPO).